
You want Transformer improvements that matter in practice. DeepSeek delivers: a new sparse attention mechanism, manifold-constrained hyper-connections, major speed gains, and deeper architectural insights.

What’s New from DeepSeek?

Native Sparse Attention (NSA)

At ACL 2025, DeepSeek presented NSA, a sparse attention mechanism co-designed with the hardware it runs on. NSA speeds up long-text processing by up to 11× while outperforming full-attention models, and it pushes context length to 1 million tokens. That is a leap in both efficiency and capability.

Beyond raw speed, NSA matches or exceeds the quality of full-attention models, resetting expectations for long-context Transformer performance.
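The three-branch structure behind NSA can be sketched for a single query. As we understand the NSA paper, each query attends through a compressed branch (one summary per block of tokens), a selected branch (full-resolution attention over the few blocks whose summaries score highest), and a sliding-window branch (the most recent tokens), and learned gates blend the three outputs. This is a minimal pure-Python sketch under simplifying assumptions, not DeepSeek's kernel: mean pooling stands in for NSA's learned block compression, the gates are fixed constants, and `nsa_sketch`, `block_size`, `top_n`, and `window` are hypothetical names.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def attend(q, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = 1.0 / math.sqrt(len(q))
    weights = softmax([dot(q, k) * scale for k in keys])
    return [sum(w * v[d] for w, v in zip(weights, values)) for d in range(len(values[0]))]


def mean_pool(vectors):
    """Average a block of vectors (assumed stand-in for NSA's learned compression)."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def nsa_sketch(q, keys, values, block_size=4, top_n=2, window=4,
               gates=(1 / 3, 1 / 3, 1 / 3)):
    """Three-branch sparse attention for one query; returns (output, selected block ids)."""
    blocks = [(s, min(s + block_size, len(keys))) for s in range(0, len(keys), block_size)]

    # Branch 1 (compressed): attend over one pooled key/value per block.
    comp_k = [mean_pool(keys[s:e]) for s, e in blocks]
    comp_v = [mean_pool(values[s:e]) for s, e in blocks]
    out_cmp = attend(q, comp_k, comp_v)

    # Branch 2 (selected): rank blocks by their compressed score, keep the top_n,
    # then attend over the original tokens inside those blocks.
    block_scores = [dot(q, k) for k in comp_k]
    ranked = sorted(range(len(blocks)), key=lambda b: block_scores[b], reverse=True)
    chosen = sorted(ranked[:top_n])
    idx = [t for b in chosen for t in range(*blocks[b])]
    out_sel = attend(q, [keys[t] for t in idx], [values[t] for t in idx])

    # Branch 3 (sliding window): attend over the most recent `window` tokens.
    out_win = attend(q, keys[-window:], values[-window:])

    # Gated sum of the branch outputs (fixed gates here; learned in NSA).
    g_cmp, g_sel, g_win = gates
    out = [g_cmp * a + g_sel * b + g_win * c for a, b, c in zip(out_cmp, out_sel, out_win)]
    return out, chosen


# Toy data (hypothetical): 16 tokens with 2-dimensional keys and values.
keys = [[math.cos(t), math.sin(t)] for t in range(16)]
values = [[float(t), 1.0] for t in range(16)]
out, chosen = nsa_sketch([1.0, 0.0], keys, values)
print("selected blocks:", chosen, "output:", out)
```

Setting `window` to the full sequence with gates `(0, 0, 1)`, or selecting every block with gates `(0, 1, 0)`, reduces the sketch to ordinary full attention, which makes a handy sanity check. The intended saving comes from each query touching only a few contiguous key blocks rather than every key.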

Manifold‑Constrained Hyper‑Connections (mHC)

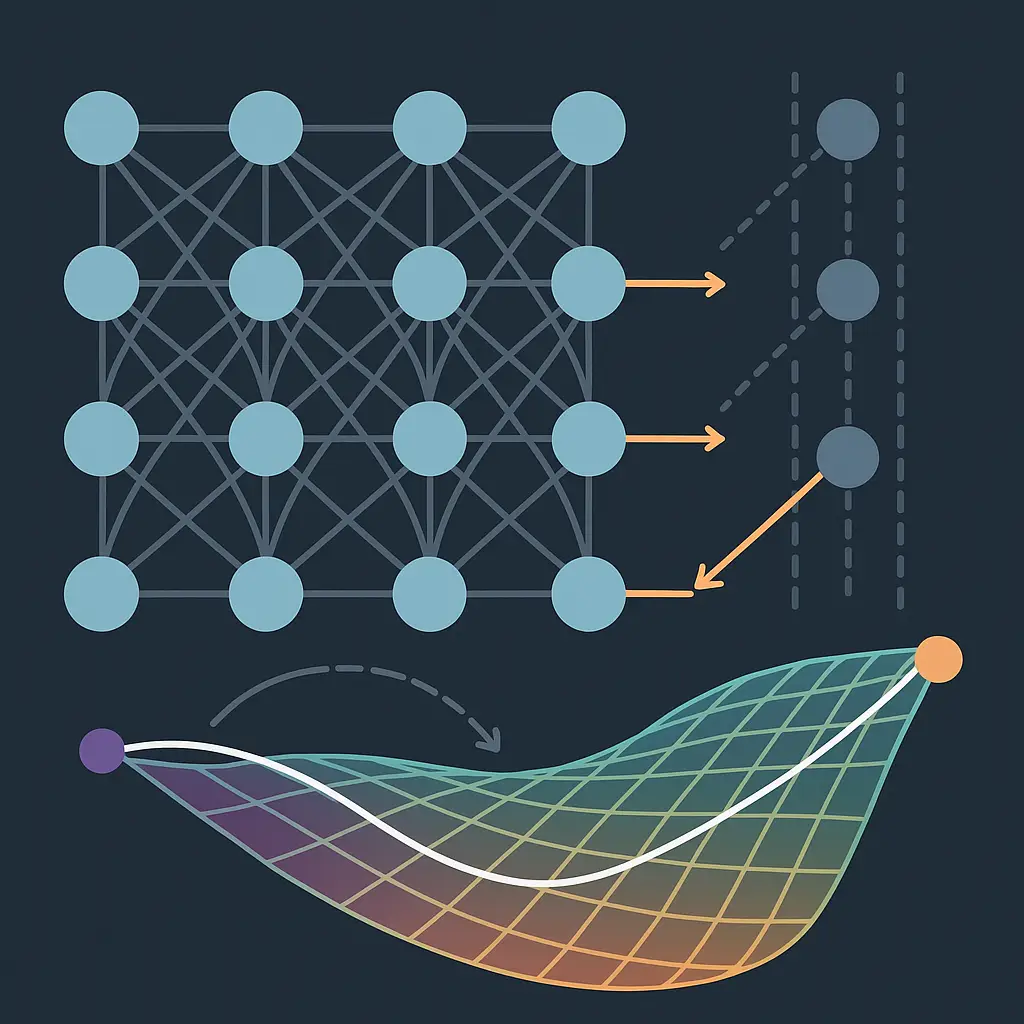

On January 2, 2026, DeepSeek released “mHC: Manifold‑Constrained Hyper‑Connections,” a framework that constrains hyper-connections (which widen a Transformer's single residual stream into several parallel, learnably mixed streams) by projecting their mixing weights onto a manifold. It targets training instability, scaling bottlenecks, and memory overhead, and early results show gains in both efficiency and model performance.
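Hyper-connections keep several parallel copies of the residual stream and mix them between layers with a learnable matrix. The sketch below illustrates one way to constrain that matrix to a manifold. Our assumption, based on our reading of the mHC paper, is that the target manifold is the set of doubly stochastic matrices (non-negative entries, every row and column summing to 1), reached with Sinkhorn-Knopp normalization; `sinkhorn`, `mix_streams`, the iteration count, and the logits are hypothetical. It covers only the residual mixing matrix, not the full mHC layer.

```python
import math


def sinkhorn(logits, iters=100):
    """Project a square matrix of logits toward a doubly stochastic matrix
    (non-negative entries, every row and column summing to 1)."""
    m = [[math.exp(x) for x in row] for row in logits]  # make every entry positive
    n = len(m)
    for _ in range(iters):
        row_sums = [sum(row) for row in m]
        m = [[x / s for x in row] for row, s in zip(m, row_sums)]  # rows sum to 1
        col_sums = [sum(m[i][j] for i in range(n)) for j in range(n)]
        m = [[m[i][j] / col_sums[j] for j in range(n)] for i in range(n)]  # columns sum to 1
    return m


def mix_streams(h, streams):
    """Mix n parallel residual streams: new_i = sum_j h[i][j] * stream_j."""
    n, dim = len(streams), len(streams[0])
    return [[sum(h[i][j] * streams[j][d] for j in range(n)) for d in range(dim)]
            for i in range(n)]


# Hypothetical learned logits for 3 residual streams, and toy 2-dim stream contents.
logits = [[0.5, -1.0, 2.0], [1.5, 0.0, -0.5], [-2.0, 1.0, 0.3]]
h = sinkhorn(logits)
streams = [[1.0, 2.0], [3.0, -1.0], [0.5, 0.5]]
mixed = mix_streams(h, streams)

# Because every column of h sums to 1, the total across streams is unchanged.
print([sum(s[d] for s in streams) for d in range(2)])
print([sum(s[d] for s in mixed) for d in range(2)])
```

Because every column sums to 1, mixing moves signal between streams without inflating or shrinking its total, and a product of doubly stochastic matrices is again doubly stochastic, so stacking many such layers cannot compound into runaway growth. That is one plausible reading of how the constraint addresses training instability.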

Deeper Technical Innovations

Multi‑Head Latent Attention, MoE, MTP, GRPO

The recent paper “DeepSeek: Paradigm shifts and technical evolution in large AI models” summarizes DeepSeek’s core algorithmic innovations:

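- Multi‑Head Latent Attention (MLA) – compresses keys and values into a small latent vector, sharply reducing the KV cache needed at inference time.

- Mixture‑of‑Experts (MoE) – activates only a few expert subnetworks per token, so per-token compute stays far below what the total parameter count would suggest.

- Multi‑Token Prediction (MTP) – trains the model to predict several future tokens at each position, densifying the training signal; the extra prediction modules can also be reused for speculative decoding to speed up generation.

- Group Relative Policy Optimization (GRPO) – a reinforcement-learning method that drops PPO's separate value model and instead scores each sampled answer against the other answers in its group, strengthening structured reasoning.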
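The MLA bullet is easiest to see through what gets cached. Standard multi-head attention stores a full key and value for every head at every token; MLA, as described in the DeepSeek-V2 paper, stores one low-dimensional latent per token and up-projects it into keys and values when needed, plus a small rotary-position (RoPE) key shared across heads. The sketch shows the down- and up-projections with toy weights, then counts cached numbers per token per layer. The helper names are hypothetical, and the sizes (128 heads of dimension 128, a 512-dim latent, a 64-dim RoPE key) are our assumption of a DeepSeek-V2-like configuration.

```python
def matvec(w, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def compress(h, w_dkv):
    """Down-project a hidden state to the small latent that MLA caches."""
    return matvec(w_dkv, h)


def expand(latent, w_uk, w_uv):
    """Up-project the cached latent back into a key and a value."""
    return matvec(w_uk, latent), matvec(w_uv, latent)


def cache_floats_per_token(n_heads, head_dim, latent_dim, rope_dim, n_layers):
    """Cached numbers per token: standard MHA vs. MLA (latent + shared RoPE key)."""
    mha = 2 * n_heads * head_dim * n_layers  # full K and V for every head
    mla = (latent_dim + rope_dim) * n_layers  # one latent plus one shared RoPE key
    return mha, mla


# Toy projection (hypothetical weights): a 4-dim hidden state cached as a 2-dim latent.
w_dkv = [[0.5, 0.0, 0.5, 0.0], [0.0, 0.25, 0.0, 0.25]]
w_uk = [[1, 0], [0, 1], [1, 1], [1, -1]]
w_uv = [[2, 0], [0, 2], [0, 0], [1, 0]]
latent = compress([1.0, 2.0, 3.0, 4.0], w_dkv)
key, value = expand(latent, w_uk, w_uv)
print(latent, key, value)

# Per-layer cache with DeepSeek-V2-like sizes (treated here as assumptions).
print(cache_floats_per_token(n_heads=128, head_dim=128, latent_dim=512, rope_dim=64, n_layers=1))
```

With those sizes the latent cache holds 576 numbers per token per layer versus 32,768 for full multi-head keys and values, roughly a 57× reduction.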
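A top-k router captures the core of the MoE bullet: a small linear router scores every expert, but only the k best-scoring experts actually run, and their outputs are blended by renormalized gate weights. This softmax-then-top-k gating is a common generic scheme and an assumption here; DeepSeek's MoE layers add refinements such as always-active shared experts and their own load-balancing strategy, which this sketch omits. The experts, router weights, and the `moe_forward` name are hypothetical.

```python
import math


def softmax(xs):
    """Numerically stable softmax."""
    m = max(xs)
    e = [math.exp(x - m) for x in xs]
    s = sum(e)
    return [v / s for v in e]


def moe_forward(x, router_weights, experts, k=2):
    """Route x to its top-k experts; return the gated mixture and the chosen indices."""
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in router_weights]
    probs = softmax(scores)
    top = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)  # renormalize gates over the selected experts
    out = [0.0] * len(x)
    for i in top:  # only k experts run; the others are skipped entirely
        y = experts[i](x)
        out = [o + (probs[i] / norm) * yi for o, yi in zip(out, y)]
    return out, top


# Four toy experts (hypothetical): each scales its input by a different factor.
experts = [lambda v, s=s: [s * vi for vi in v] for s in (1.0, 2.0, 3.0, 4.0)]
router_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
out, chosen = moe_forward([2.0, 1.0], router_weights, experts, k=2)
print(chosen, out)
```

Only two of the four experts execute for this input, which is where MoE saves compute: total parameters grow with the number of experts, while per-token work depends only on k.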

The paper also covers engineering advances in scaling, inference, and system-level design. Together, these innovations target performance, cost, and real-world deployment efficiency.

Broad Impact and Ecosystem Shift

DeepSeek’s open‑source, cost‑effective design is shaking up the AI landscape. Comparisons with ChatGPT, Claude, and Gemini show strengths in structured reasoning, formal tasks, code generation, and diagnostics, though creative tasks and user safety remain challenges.

With NSA and mHC on the horizon, DeepSeek positions itself as an open alternative to proprietary models, helping democratize AI research and access.

Looking Ahead

Watch for DeepSeek V4, expected in Q1 2026. NSA and mHC set the stage for more efficient, scalable, and robust Transformers: expect longer context, richer reasoning, and hardware-aware modeling.

Conclusion

DeepSeek’s recent breakthroughs, Native Sparse Attention and manifold-constrained hyper‑connections, drive real advances in Transformer efficiency, scalability, and capability. Its open-source stance speeds innovation across the field.

Projectchat.ai offers multimodal chat from all providers, image generation, and Agentic/Hybrid RAG over your own data—in dedicated workspaces. Explore smart AI workflows today: https://projectchat.ai/trial/